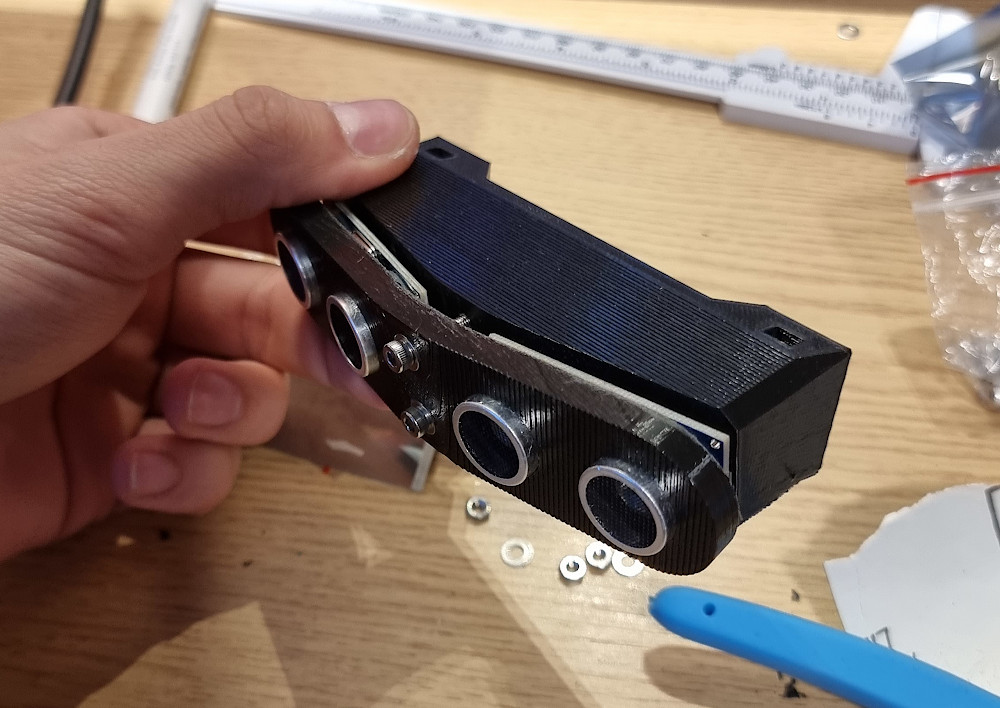

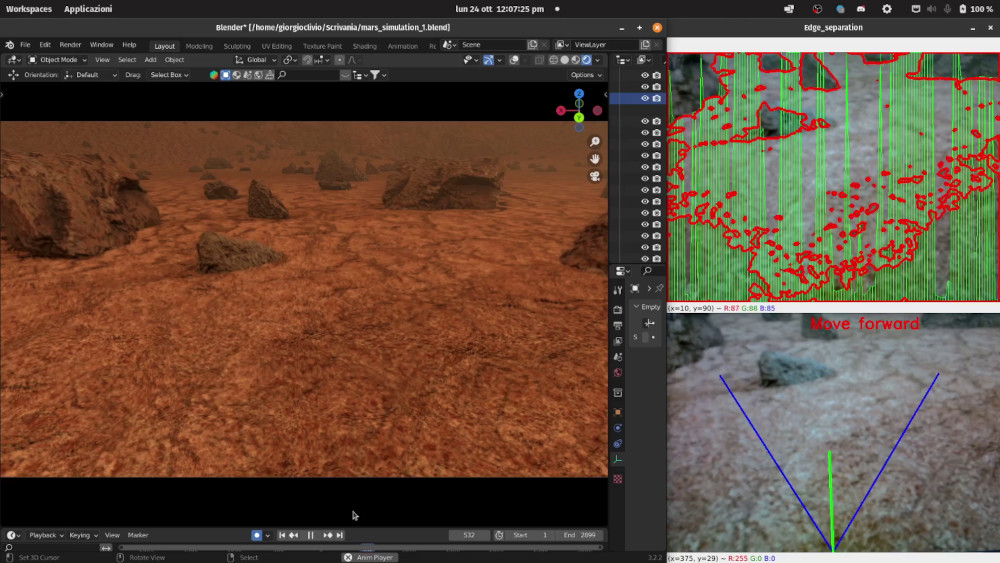

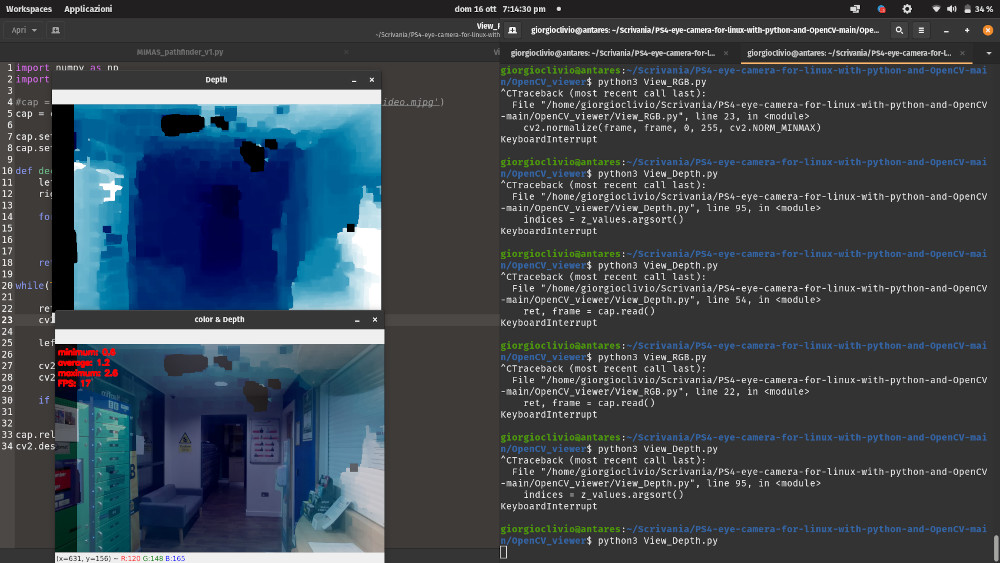

Stereovision is an important part of Mimas: it is not strictly required, but paired with a good algorithm it can make autonomous navigation much easier. In addition, the stereo cameras can take pictures that are "sent to Earth" (in other words, to my PC). Viewed through a pair of red-and-blue glasses, these pictures give an old-fashioned 3D photo effect. As mentioned in previous posts about Computer Vision, a PS4 camera was selected for mounting on the Mast Tower. This required designing a custom enclosure for the camera's motherboard and running some tests before attaching it to the bottom of the Imager. The tests were run outdoors, with the stereo cameras placed on top of a tripod.
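As a rough illustration of the red-and-blue 3D effect, here is a minimal sketch of how a stereo pair can be merged into an anaglyph. It is not the code running on Mimas: the function names `to_gray` and `make_anaglyph` are hypothetical, images are plain nested lists of `(r, g, b)` tuples to keep the example dependency-free, and it assumes the red filter sits over the left eye and the blue one over the right.

```python
def to_gray(pixel):
    """Convert an (r, g, b) pixel to a single 0-255 luminance value."""
    r, g, b = pixel
    # Integer form of the common 0.299 / 0.587 / 0.114 luma weights.
    return (299 * r + 587 * g + 114 * b) // 1000


def make_anaglyph(left, right):
    """Merge two same-sized images into a red/blue anaglyph.

    Assumption: red filter on the left eye, blue filter on the right eye.
    The red channel carries the left view, the blue channel the right view,
    and the green channel is left empty.
    """
    if len(left) != len(right) or any(
        len(l_row) != len(r_row) for l_row, r_row in zip(left, right)
    ):
        raise ValueError("left and right images must have the same size")

    return [
        [(to_gray(lp), 0, to_gray(rp)) for lp, rp in zip(l_row, r_row)]
        for l_row, r_row in zip(left, right)
    ]


# Tiny 1x2 example: a white/black left view and a black/white right view.
left_view = [[(255, 255, 255), (0, 0, 0)]]
right_view = [[(0, 0, 0), (255, 255, 255)]]
anaglyph = make_anaglyph(left_view, right_view)
```

In practice the two views would come from the left and right sensors of the stereo camera, and an image library would do the per-pixel work far faster than nested lists.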

- [Hours of work: 2]

- [People involved: Giorgio]