From Bandits to SPARCbandit: Toward Interpretable Learning

In May, development shifted from low-level control to high-level policy learning, with the design and formalisation of SPARCbandit: a lightweight, explainable reinforcement learning agent tailored for planetary exploration. What began in late 2024 as a series of experiments with contextual multi-armed bandits evolved into a formal proposal and implementation framework suitable for conference submission. SPARCbandit, short for Stochastic Planning & AutoRegression Contextual Bandit, was designed to address the limitations of previous contextual bandit implementations. Where earlier versions were reactive and short-sighted, SPARCbandit introduced several critical features:

- Eligibility Traces (TD(λ)) to propagate credit over time, enabling the agent to associate delayed rewards with prior decisions.

- Adaptive Cost Tracking, using a baseline and autoregressive deviation filter to account for energy consumption, sensor drift, and terrain variance.

- Latching Mechanism, allowing user-specified action preferences (e.g., preferred gaits) to remain in contention despite short-term suboptimality.

- Planning via Dyna-style Updates, where real experiences are augmented with simulated transitions from a learned one-step model.

- Action Selection with Cost-Aware Filtering, combining exploration (ε-greedy) and robustness under non-stationary conditions.
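As a rough illustration of how eligibility traces and ε-greedy selection fit together, here is a minimal sketch of Sarsa(λ) with linear Q-values and accumulating traces. It is not the SPARCbandit code: the class name `LinearSarsaLambda`, the hyperparameter defaults, and the two-state toy episode are all assumptions made for this example, and cost tracking, latching, and Dyna-style planning are left out.

```python
import numpy as np


class LinearSarsaLambda:
    """Sarsa(lambda) with linear Q-values and accumulating eligibility traces.

    Hypothetical sketch: names and defaults are illustrative only.
    """

    def __init__(self, n_features, n_actions, alpha=0.1, gamma=0.99,
                 lam=0.9, epsilon=0.1, seed=0):
        self.w = np.zeros((n_actions, n_features))  # one weight row per action
        self.z = np.zeros_like(self.w)              # eligibility traces
        self.alpha, self.gamma = alpha, gamma
        self.lam, self.epsilon = lam, epsilon
        self.rng = np.random.default_rng(seed)

    def q_values(self, phi):
        """Q(s, a) for every action, given the feature vector phi of s."""
        return self.w @ phi

    def select_action(self, phi):
        """Epsilon-greedy: random action with probability epsilon."""
        if self.rng.random() < self.epsilon:
            return int(self.rng.integers(self.w.shape[0]))
        return int(np.argmax(self.q_values(phi)))

    def update(self, phi, action, reward, phi_next, next_action, done):
        """One on-policy TD(lambda) step; returns the TD error."""
        q = self.w[action] @ phi
        q_next = 0.0 if done else self.w[next_action] @ phi_next
        delta = reward + self.gamma * q_next - q
        self.z *= self.gamma * self.lam   # decay all traces
        self.z[action] += phi             # mark the visited (state, action)
        self.w += self.alpha * delta * self.z
        if done:
            self.z[:] = 0.0               # traces do not cross episodes
        return delta


# Toy episode: two one-hot states, one action, reward only on the last step.
agent = LinearSarsaLambda(n_features=2, n_actions=1, epsilon=0.0)
s0, s1 = np.array([1.0, 0.0]), np.array([0.0, 1.0])
agent.update(s0, 0, 0.0, s1, 0, done=False)
agent.update(s1, 0, 1.0, s0, 0, done=True)
# The weight for s0 is now non-zero even though its own step earned nothing:
# the decayed trace carried the delayed reward back to the earlier decision.
print(agent.w)
```

With λ = 0 the trace for the first state would have decayed to zero before the reward arrived, and only the final state's weight would change.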

These features were incorporated into SPARCbandit v16, the most stable and refined version of the architecture to date. It supported both sequential learning (TD updates) and bandit-style decision-making, with Q-values parameterised as linear functions over Fourier basis features for interpretability.

At the same time, I began preparing the associated conference paper for iSpaRo 2025, outlining the theoretical motivation, implementation details, and performance benchmarks of SPARCbandit. The emphasis was not on raw performance, where deep RL models would outperform it, but on transparency, resource-awareness, and deployability on constrained hardware like the Raspberry Pi 4 aboard Continuity.

By the end of May, the core implementation had been tested on benchmark environments such as LunarLander and adapted to my own simulation framework. The algorithm showed stability, robustness to noise, and meaningful cost-sensitive behaviour, all while retaining traceable decision logic, which is essential for real-world deployment in mission-critical systems.
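The linear Q-value parameterisation over Fourier basis features mentioned above can be sketched as follows. This uses the standard Fourier basis, with features cos(π c · s) over a state rescaled to the unit cube. The function names, the basis order, and the state bounds are assumptions for illustration, not values taken from SPARCbandit v16.

```python
import itertools

import numpy as np


def fourier_coefficients(state_dim, order):
    """All integer coefficient vectors c in {0, ..., order}^state_dim."""
    return np.array(list(itertools.product(range(order + 1), repeat=state_dim)))


def fourier_features(state, low, high, coeffs):
    """Fourier basis features cos(pi * c . s) for a state rescaled to [0, 1]."""
    s = (np.asarray(state, dtype=float) - low) / (high - low)
    s = np.clip(s, 0.0, 1.0)  # guard against states outside the assumed bounds
    return np.cos(np.pi * (coeffs @ s))


# Hypothetical 2-D state (e.g. an angle and an angular rate) with made-up bounds.
low, high = np.array([-1.0, -2.0]), np.array([1.0, 2.0])
coeffs = fourier_coefficients(state_dim=2, order=3)   # 16 features
phi = fourier_features([0.2, -0.5], low, high, coeffs)

# With linear Q-values, each feature's contribution to Q(s, a) is a single
# weight * feature product, so a decision can be traced term by term.
weights = np.zeros(len(coeffs))
weights[1] = 0.5                      # illustrative weight, not a learned one
contributions = weights * phi
q_value = contributions.sum()
print(q_value, contributions[1])
```

Because the value estimate is a plain weighted sum, inspecting `contributions` shows which frequency terms drive a given decision, which is the kind of traceability a deep network does not offer directly.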

A Constraint-Aware Bandit

In February, the control architecture for Continuity took a substantial step forward with the formal integration of the bandit-based decision layer into the QuectoFSM state machine framework. This was the first implementation of what I termed Constrained Local Learning (CLL): a structure where bandit-based exploration is actively gated by contextual rules, and actions are only permitted when predefined safety and state conditions are satisfied. The multi-armed bandit (MAB) component, now in version 3, moved beyond basic reward selection and hard-coded action cycling. Instead of optimising only low-level parameters (e.g., step height or cycle duration), the new system could also select from a list of gait patterns, each encoded as an arm with an associated performance history. To regulate instability, the bandit’s reward function was overhauled. The original inverse formulation:

was replaced by a log-scaled penalty system, better suited for sharply discouraging large attitude deviations while maintaining sensitivity around small corrections:

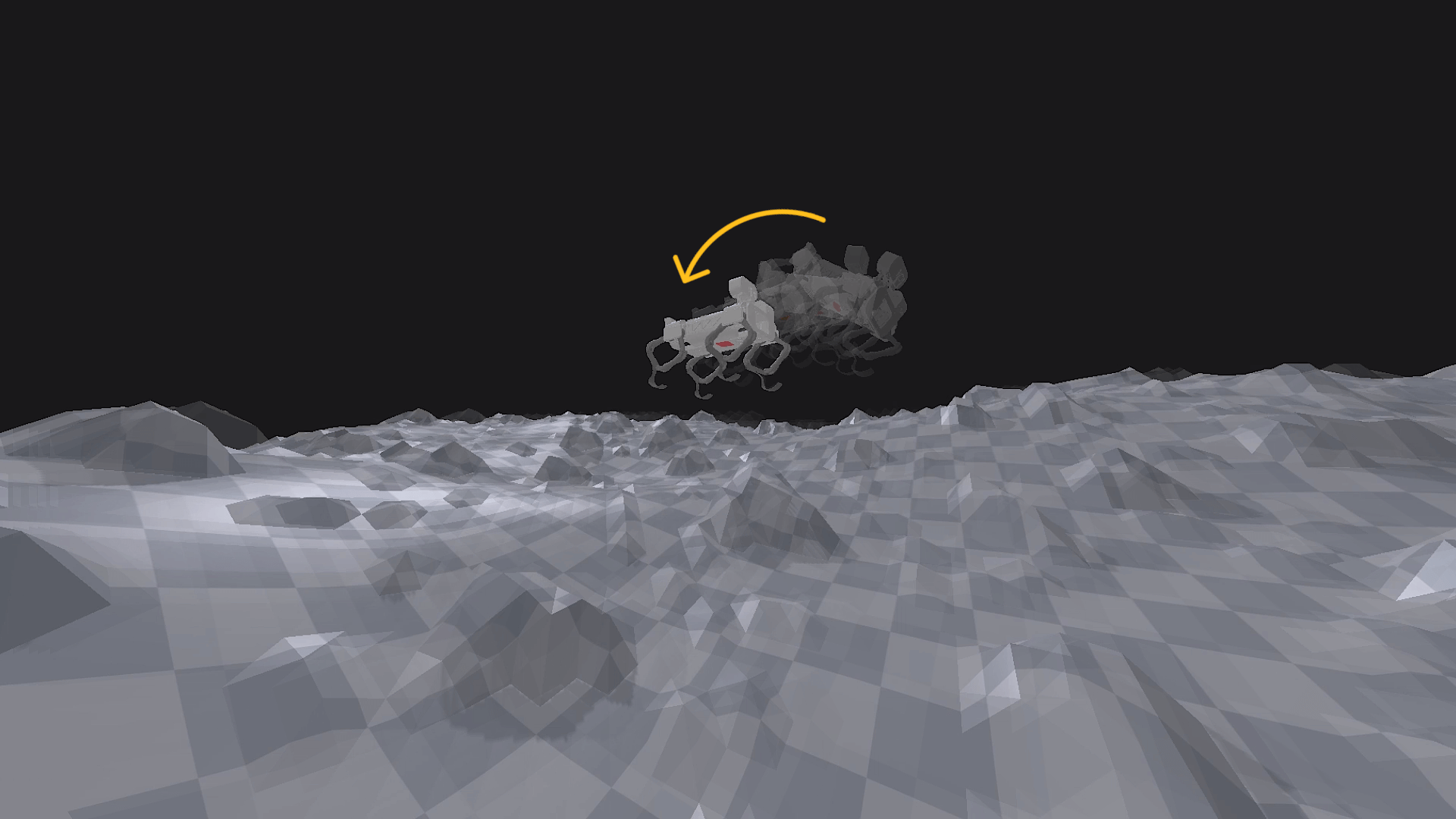
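The gating idea behind Constrained Local Learning, where the bandit may only choose among arms whose safety and state conditions currently hold, can be sketched as below. This is a hypothetical illustration rather than the QuectoFSM integration itself: the class `GatedBandit`, the gait names, the roll threshold, and the reward values are all invented for the example, and the reward function is deliberately left abstract.

```python
import random


class GatedBandit:
    """Epsilon-greedy bandit whose arms are filtered by per-arm guard rules.

    Hypothetical sketch: arm names and guards are illustrative only.
    """

    def __init__(self, guards, epsilon=0.1, seed=0):
        self.guards = dict(guards)            # arm -> predicate(context) -> bool
        self.arms = list(self.guards)
        self.epsilon = epsilon
        self.counts = {arm: 0 for arm in self.arms}
        self.values = {arm: 0.0 for arm in self.arms}  # running mean reward
        self.rng = random.Random(seed)

    def permitted(self, context):
        """Arms whose guard condition holds in the current context."""
        return [arm for arm in self.arms if self.guards[arm](context)]

    def select(self, context):
        """Pick an arm among the permitted ones, or None if all are blocked."""
        allowed = self.permitted(context)
        if not allowed:
            return None                       # caller falls back to a safe state
        if self.rng.random() < self.epsilon:
            return self.rng.choice(allowed)   # explore, but only inside the gate
        return max(allowed, key=lambda arm: self.values[arm])

    def update(self, arm, reward):
        """Incremental mean update of the arm's performance history."""
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


# Illustrative gaits: "trot" is only allowed while roll stays small.
bandit = GatedBandit(
    guards={
        "trot": lambda ctx: abs(ctx["roll_deg"]) < 10.0,
        "crawl": lambda ctx: True,
    },
    epsilon=0.0,
)
bandit.update("trot", 1.0)
bandit.update("crawl", 0.2)
print(bandit.select({"roll_deg": 2.0}))    # trot has the better history
print(bandit.select({"roll_deg": 30.0}))   # trot is gated out, so crawl
```

Keeping the guards outside the learning rule means exploration never reaches an arm the state machine has ruled out, however attractive its reward history looks.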

Reorientation and Bandit-Based Autonomy

Watch: Self-reorientation attempt using Contextual MAB

During November and December, the project's focus shifted toward a more abstract but mission-critical aspect of legged mobility: attitude control and self-reorientation in microgravity. While locomotion on uneven terrain remained a long-term goal, this phase initiated the development of strategies for reorienting a free-floating rigid body using only internal leg motions, without relying on external contacts or torque sources. This involved modelling Continuity as a non-holonomic system in microgravity, where angular momentum is conserved and reorientation must occur through the redistribution of internal masses, specifically the four legs. The problem setup mirrors the classical "falling cat" scenario, where a free-floating body must execute sequences of internal movements to rotate in space despite zero net external torque.
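To make the falling-cat mechanism concrete, here is a deliberately simplified planar sketch. It assumes the legs can be lumped into a single appendage rotating about the body's centre of mass, that the appendage's moment of inertia changes when the legs extend or retract, and that total angular momentum is zero. Under those assumptions, swinging the legs by an angle Δφ rotates the body by -I_leg / (I_body + I_leg) · Δφ, so swinging forward with extended legs and back with retracted legs leaves a net rotation. The function names and inertia values are hypothetical and are not taken from the Continuity model.

```python
import math


def body_rotation(delta_phi, body_inertia, leg_inertia):
    """Body rotation (rad) from swinging the legs by delta_phi (rad).

    Follows from zero total angular momentum in the plane:
    I_body * w_body + I_leg * (w_body + phi_dot) = 0.
    """
    return -leg_inertia / (body_inertia + leg_inertia) * delta_phi


def net_rotation_per_cycle(delta_phi, body_inertia,
                           inertia_extended, inertia_retracted):
    """Net body rotation after one closed cycle of leg motion.

    Cycle: swing forward with legs extended, retract, swing back with legs
    retracted, extend again. In this lumped model, extending or retracting
    at a fixed joint angle causes no rotation.
    """
    forward = body_rotation(delta_phi, body_inertia, inertia_extended)
    backward = body_rotation(-delta_phi, body_inertia, inertia_retracted)
    return forward + backward


# Made-up inertias (kg m^2) and a 60 degree leg swing.
swing = math.radians(60.0)
per_cycle = net_rotation_per_cycle(swing, body_inertia=1.0,
                                   inertia_extended=1.0,
                                   inertia_retracted=0.25)
print(math.degrees(per_cycle))  # net body rotation per cycle, in degrees
```

The legs return to their starting configuration after each cycle, yet the body's orientation has changed. If the two inertias were equal, the forward and backward contributions would cancel exactly, which is why shape change, not just leg motion, is needed.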