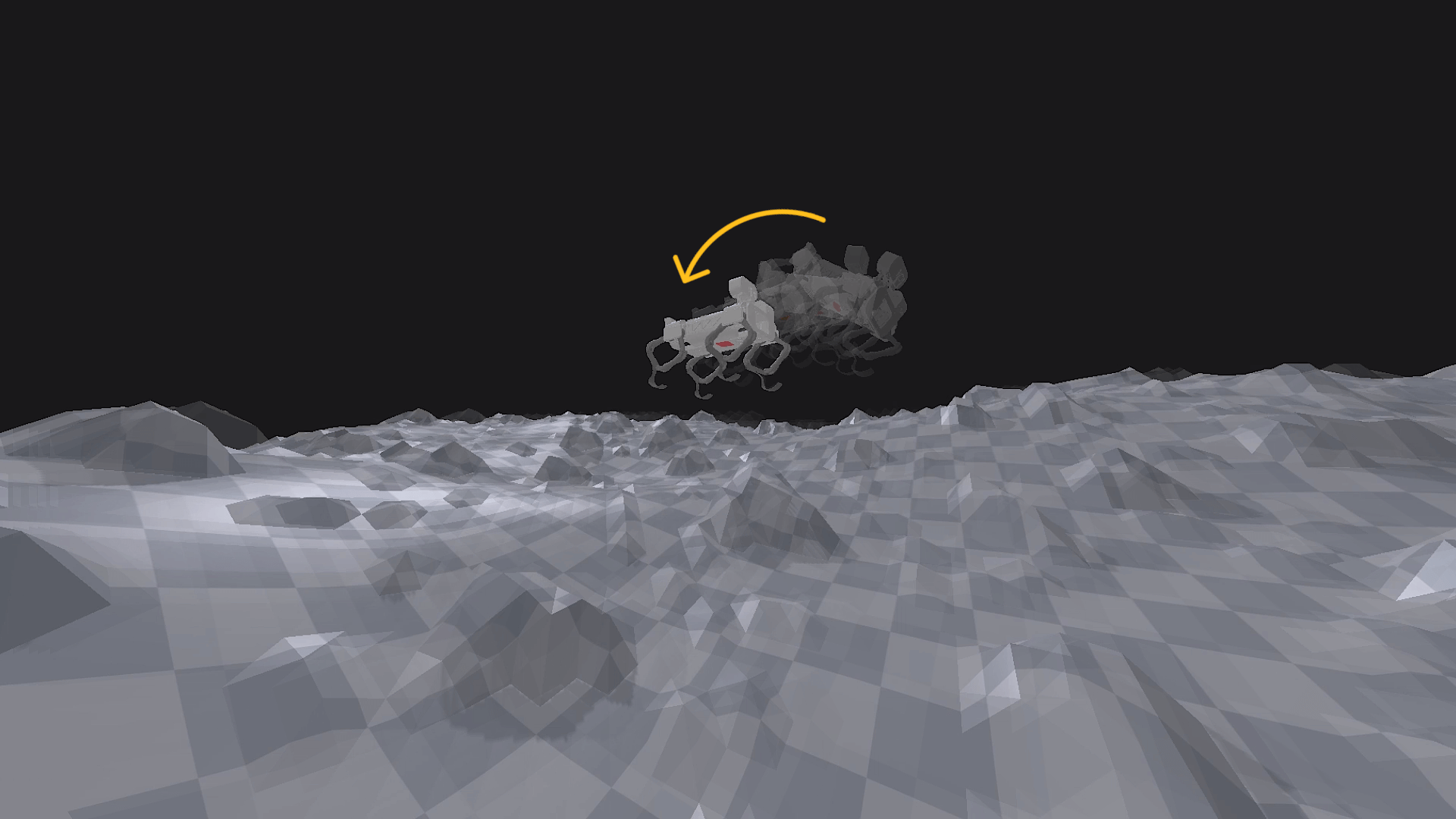

Watch: Self-reorientation attempt using Contextual MAB

During November and December, the project's focus shifted toward a more abstract, but mission-critical, aspect of legged mobility: attitude control and self-reorientation in microgravity. Locomotion on uneven terrain remained a long-term goal. This phase, however, began the development of strategies for reorienting a free-floating rigid body using only internal leg motions, without relying on external contacts or torque sources. This involved modelling Continuity as a non-holonomic system in microgravity. Because angular momentum is conserved, reorientation must come from redistributing internal masses, specifically the four legs. The setup mirrors the classical "falling cat" problem, in which a free-floating body executes sequences of internal movements to rotate in space despite zero net external torque.
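The mechanism can be made concrete with a planar simplification. This is an illustrative assumption, not the project's model. Lump one diametrically opposed leg pair into two point masses $m$ at radius $r$ and swing angle $\phi$ relative to a body of inertia $I_b$ and attitude $\theta$, so the centre of mass stays at the body centre. Leg extension $\dot{r}$ is purely radial and carries no angular momentum about the centre. Zero total angular momentum then gives:

$$
L = I_b\,\dot{\theta} + 2 m r^2\,(\dot{\theta} + \dot{\phi}) = 0
\quad\Longrightarrow\quad
\dot{\theta} = -k(r)\,\dot{\phi},
\qquad k(r) = \frac{2 m r^2}{I_b + 2 m r^2}
$$

Over a closed loop of leg motions (swing out by $\Delta\phi$ while extended at $r_{\text{ext}}$, retract, swing back while retracted at $r_{\text{ret}}$, extend), the net body rotation is:

$$
\Delta\theta = -\oint k(r)\,\mathrm{d}\phi = -\Delta\phi\,\bigl[k(r_{\text{ext}}) - k(r_{\text{ret}})\bigr]
$$

This is nonzero whenever $r_{\text{ext}} \neq r_{\text{ret}}$. The attitude is therefore not a function of the leg configuration alone, which is exactly the non-holonomic property that lets the "falling cat" reorient with $L = 0$.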

To test this, I implemented a simplified dynamic model in PyBullet:

- The robot’s legs were treated as two-DoF articulations (extension/retraction and swing),

- The main body was defined as a floating base with mass and inertia properties,

- And the physics engine was configured for zero gravity, isolating internal dynamics.
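The model above is a PyBullet setup. As a lighter, dependency-free illustration of why two-DoF leg motion (swing plus extension) can reorient a floating base at all, the sketch below reduces the problem to a planar case. Everything here is an assumption for illustration:

- One diametrically opposed leg pair is lumped into point masses.
- The parameter values (`I_BODY`, `M_LEG`, `R_EXT`, `R_RET`) are hypothetical.
- The helper functions `coupling`, `body_rotation` and `rectangular_gait` are not from the project.

The code integrates the zero-angular-momentum constraint along a closed swing/extension loop.

```python
import math

# Hypothetical planar parameters (illustrative only, not Continuity's real values).
I_BODY = 0.05              # body moment of inertia about its centre [kg m^2]
M_LEG = 0.1                # mass of each leg, lumped at the foot [kg]
R_EXT, R_RET = 0.15, 0.05  # extended / retracted leg radius [m]


def coupling(r):
    """k(r): body rotation per radian of leg swing for one symmetric leg pair.

    Zero total angular momentum about the centre gives
    I_BODY * theta_dot + 2 * M_LEG * r**2 * (theta_dot + phi_dot) = 0
    =>  theta_dot = -k(r) * phi_dot. Radial (extension) motion adds no momentum.
    """
    i_legs = 2.0 * M_LEG * r * r
    return i_legs / (I_BODY + i_legs)


def body_rotation(path):
    """Integrate d(theta) = -k(r) d(phi) along a sampled leg path [(phi, r), ...]."""
    theta = 0.0
    for (phi0, r0), (phi1, r1) in zip(path, path[1:]):
        theta -= coupling(0.5 * (r0 + r1)) * (phi1 - phi0)
    return theta


def rectangular_gait(swing, n=240):
    """Closed loop in (phi, r): swing out extended, retract, swing back retracted, extend."""
    path = [(swing * i / n, R_EXT) for i in range(n)]
    path += [(swing, R_EXT + (R_RET - R_EXT) * i / n) for i in range(n)]
    path += [(swing * (1 - i / n), R_RET) for i in range(n)]
    path += [(0.0, R_RET + (R_EXT - R_RET) * i / n) for i in range(n + 1)]
    return path


if __name__ == "__main__":
    dtheta = body_rotation(rectangular_gait(math.radians(60)))
    print(f"net body rotation per gait cycle: {math.degrees(dtheta):.2f} deg")
    # prints: net body rotation per gait cycle: -4.36 deg
```

The legs return to their starting pose, but the loop encloses area in (phi, r) space, so the body ends up rotated. Running the loop in reverse reverses the rotation. Setting `R_RET = R_EXT` collapses the loop and the net rotation drops to zero.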

Initial attempts at reorientation used hard-coded sequences of leg movements. However, it quickly became clear that hand-tuning these sequences would not scale. This was especially true given noisy IMU data and the numerical instability of Euler-based integration in PyBullet. This led to a contextual multi-armed bandit (MAB) architecture, supervised by the emerging QuectoFSM state machine. In its early form, the bandit selected among predefined leg movement patterns (the arms), with the reward computed as:

where

The context space was derived from roll and pitch angles quantised at a resolution of Δ = 2°. This allowed the MAB to learn which motion sequences reduced deviation from a horizontal orientation. Leg movement sequences were mapped to combinations of joint commands, with tibia angles derived from femur positions using the following parameterisation:

These commands were then used to simulate reactive control strategies in a 240 Hz physics loop, monitored in real time for oscillations, overcorrections, or divergence. Reward stagnation or instability (often caused by bouncing or accumulated integration errors) triggered simulation resets. These resets helped the agent explore new regions of the control space without discarding the rewards it had already learned. The simulation remained fragile, partly because of PyBullet’s poor handling of angular momentum in closed kinematic chains. Even so, by the end of December the system showed its first consistent roll and pitch corrections without any contact with the environment, driven entirely by inertial leg movement.
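The learning loop described above can be sketched as a tabular epsilon-greedy contextual bandit. This is a minimal sketch under stated assumptions:

- The original reward formula is not reproduced in this text, so `reward` below assumes the reward is the reduction in |roll| + |pitch|.
- The arm names, `epsilon`, the stagnation window and its threshold are hypothetical.
- A toy scaling model stands in for the PyBullet loop.
- Nothing here models QuectoFSM.

The 2° context resolution and the "reset without discarding learned rewards" behaviour follow the text.

```python
import math
import random
from collections import defaultdict, deque

DELTA_DEG = 2.0  # context resolution quoted in the text

# Hypothetical arm names standing in for the predefined leg patterns.
ARMS = ["swing_front_pair", "swing_rear_pair", "twist_diagonal", "hold"]


def quantise(roll_deg, pitch_deg, delta=DELTA_DEG):
    """Bin continuous roll/pitch (degrees) into a discrete context cell."""
    return (math.floor(roll_deg / delta), math.floor(pitch_deg / delta))


def reward(before, after):
    """ASSUMED reward: reduction in |roll| + |pitch| (positive means closer to level)."""
    return (abs(before[0]) + abs(before[1])) - (abs(after[0]) + abs(after[1]))


class ContextualBandit:
    """Epsilon-greedy contextual bandit with a running-mean value per (context, arm)."""

    def __init__(self, arms, epsilon=0.1, window=50, min_progress=0.01, seed=0):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = defaultdict(int)      # (context, arm) -> number of pulls
        self.values = defaultdict(float)    # (context, arm) -> mean reward so far
        self.recent = deque(maxlen=window)  # sliding window for stagnation checks
        self.min_progress = min_progress

    def select(self, context):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)  # explore
        return max(self.arms, key=lambda a: self.values[(context, a)])  # exploit

    def update(self, context, arm, r):
        key = (context, arm)
        self.counts[key] += 1
        self.values[key] += (r - self.values[key]) / self.counts[key]  # incremental mean
        self.recent.append(r)

    def stagnating(self):
        """True when a full window of rewards averages below min_progress."""
        full = len(self.recent) == self.recent.maxlen
        return full and sum(self.recent) / len(self.recent) < self.min_progress

    def on_reset(self):
        """Simulation reset: clear the stagnation window but keep learned values."""
        self.recent.clear()


if __name__ == "__main__":
    # Toy stand-in for the simulator (hypothetical dynamics, not PyBullet):
    # each arm scales roll and pitch; factors below 1 move toward level.
    scale = {"swing_front_pair": (0.8, 1.0), "swing_rear_pair": (1.0, 0.8),
             "twist_diagonal": (1.1, 1.1), "hold": (1.0, 1.0)}
    rng = random.Random(1)
    bandit = ContextualBandit(ARMS)
    attitude, resets = (10.0, -6.0), 0
    for _ in range(2000):
        ctx = quantise(*attitude)
        arm = bandit.select(ctx)
        sr, sp = scale[arm]
        new = (attitude[0] * sr + rng.gauss(0, 0.1), attitude[1] * sp + rng.gauss(0, 0.1))
        bandit.update(ctx, arm, reward(attitude, new))
        attitude = new
        if bandit.stagnating():  # stagnation triggers a reset to a random attitude
            bandit.on_reset()
            resets += 1
            attitude = (rng.uniform(-20, 20), rng.uniform(-20, 20))
    contexts = {c for c, _ in bandit.values}
    print(f"resets: {resets}, contexts seen: {len(contexts)}")
```

A tabular running mean keeps the sketch small and matches a discrete, quantised context space. Because `on_reset` clears only the stagnation window, the value table survives each reset, as the text describes.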